- Heisenberg goes deep: DragGAN is A New Way to Control Image Generation

- How to manipulate images with just a few clicks?

- What is a Generative Adversarial Network?

- Problem Statement Targeted by DragGAN

- How does DragGAN work?

- What can DragGAN do?

- How to use DragGAN? – Yet to be publicly available

- Why does DragGAN matter? Potential Applications

- Source Code is also available now!

Heisenberg goes deep: DragGAN is A New Way to Control Image Generation

How to manipulate images with just a few clicks?

Have you ever wanted to change the pose, shape, expression, or layout of an image (see our other related blog/video)? Maybe you want to make a cat look angrier, or a car more aerodynamic, or a landscape more scenic. Or maybe you want to create something entirely new by combining different elements from different images. DragGAN is a new way to control image generation and it can give Adobe Photoshop a run for its money.

You might think that you need some advanced photo editing software or skills to do that. But what if I told you that there is a simpler and more powerful way to manipulate images, using a technique called generative adversarial networks (GANs)?

What is a Generative Adversarial Network?

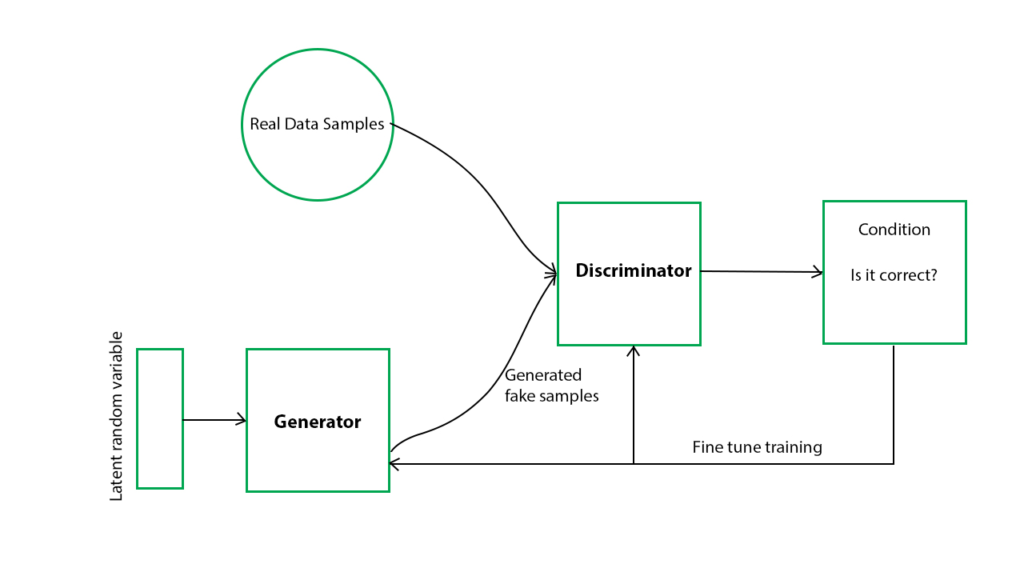

GANs are a type of machine-learning model that can generate realistic images from random noise. They can also learn the underlying structure and distribution of a large dataset of images, such as animals, cars, humans, landscapes, etc. This means that they can produce images that are consistent with the style and content of the dataset, but also have some variation and diversity.
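For readers who prefer a formula, the training game behind a GAN can be written down compactly. The expression below is the objective from the original GAN formulation, not something specific to DragGAN or StyleGAN2 (which train with variants of it). Here $G$ is the generator, $D$ is the discriminator, $x$ is a real image from the dataset, and $z$ is the random noise:

$$
\min_G \max_D \; \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

The discriminator tries to make this value large by telling real images from generated ones, while the generator tries to make it small by producing images the discriminator accepts as real.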

Problem Statement Targeted by DragGAN

But how can we control what kind of images GANs generate? How can we make them follow our specific needs and preferences? Existing approaches rely on manually annotated training data or a prior 3D model, which often lack flexibility, precision, and generality. For example, if we want to change the pose of an animal, we need to have labels for the joints and limbs of each animal in the dataset. Or if we want to change the shape of a car, we need to have a 3D model of the car and its parts.

In this blog post, I will introduce you to a new way of controlling GANs, that is, to “drag” any points of the image to precisely reach target points in a user-interactive manner. This is based on a recent research paper by Xingang Pan et al., accepted to SIGGRAPH 2023.

The authors propose DragGAN, a system that allows anyone to deform an image with precise control over where pixels go, thus manipulating the pose, shape, expression, and layout of diverse categories such as animals, cars, humans, landscapes, etc.

Drag Your GAN: Interactive Point-based Manipulation on the Generative Image Manifold (mpg.de)

How does DragGAN work?

DragGAN consists of two main components: 1) a feature-based motion supervision that drives the handle point to move towards the target position, and 2) a new point tracking approach that leverages the discriminative generator features to keep localizing the position of the handle points.

Feature-based Motion Supervision

The first component is based on the idea that moving a point on the image should correspond to moving a point on the feature map of the generator. The feature map is a representation of the image at an intermediate layer of the generator network. It contains information about the shape and appearance of the objects in the image. DragGAN does not edit the feature map directly. Instead, it optimizes the latent code with a loss that encourages the features in a small patch around each handle point to shift one small step toward the target, which in turn changes the image the generator outputs at that location.
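To make the idea concrete, here is a minimal sketch of how such a loss could be evaluated for a single handle point. It is an illustration under assumptions, not the authors' implementation: the names `bilinear` and `motion_supervision_loss` are hypothetical, the feature map is a plain nested list, and the paper's additional mask term (which keeps unselected regions fixed) is left out. The sketch only computes the loss value; in the real system the gradient of this loss with respect to the latent code is what moves the image.

```python
import math
from typing import List, Tuple

FeatureMap = List[List[List[float]]]  # indexed as [row][col][channel]


def bilinear(features: FeatureMap, r: float, c: float) -> List[float]:
    """Bilinearly interpolate the feature vector at a fractional (row, col)."""
    height, width = len(features), len(features[0])
    # Clamp the sampling position to the feature map.
    r = min(max(r, 0.0), height - 1.0)
    c = min(max(c, 0.0), width - 1.0)
    r0, c0 = int(math.floor(r)), int(math.floor(c))
    r1, c1 = min(r0 + 1, height - 1), min(c0 + 1, width - 1)
    fr, fc = r - r0, c - c0
    return [
        (1 - fr) * (1 - fc) * features[r0][c0][k]
        + (1 - fr) * fc * features[r0][c1][k]
        + fr * (1 - fc) * features[r1][c0][k]
        + fr * fc * features[r1][c1][k]
        for k in range(len(features[0][0]))
    ]


def motion_supervision_loss(features: FeatureMap, handle: Tuple[int, int],
                            target: Tuple[int, int], radius: int) -> float:
    """L1 gap between patch features and the features one unit step toward the target."""
    (pr, pc), (tr, tc) = handle, target
    norm = math.hypot(tr - pr, tc - pc)
    if norm == 0.0:
        return 0.0  # the handle already sits on the target
    dr, dc = (tr - pr) / norm, (tc - pc) / norm  # unit direction handle -> target
    height, width = len(features), len(features[0])
    loss = 0.0
    for r in range(max(0, pr - radius), min(height, pr + radius + 1)):
        for c in range(max(0, pc - radius), min(width, pc + radius + 1)):
            if (r - pr) ** 2 + (c - pc) ** 2 > radius ** 2:
                continue  # keep only pixels inside the circular patch
            current = features[r][c]  # would be treated as a constant during optimization
            shifted = bilinear(features, r + dr, c + dc)
            loss += sum(abs(a - b) for a, b in zip(current, shifted))
    return loss


# Toy 5x5 map with one channel whose value equals the column index.
ramp = [[[float(c)] for c in range(5)] for _ in range(5)]
loss = motion_supervision_loss(ramp, handle=(2, 2), target=(2, 4), radius=1)
print(loss)  # 5.0
```

In the toy map every pixel differs from its right-hand neighbor by exactly 1, and the circular patch of radius 1 contains five pixels, so the loss is 5.0. On a constant feature map the loss would be zero, since shifting the patch changes nothing.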

Point Tracking with Discriminative Generator Features

The second component keeps track of where each handle point has ended up after every optimization step. Despite the word “discriminative”, it does not use the GAN's discriminator, the separate network that tries to distinguish real images from generated ones. It relies on the generator's own intermediate features, which are distinctive enough to identify a point. DragGAN searches a small neighborhood around the handle point's previous position for the location whose feature vector is closest to the handle point's original feature, and that location becomes the updated handle point.
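The tracking step amounts to a nearest-neighbor search in feature space. The sketch below illustrates it under simplifying assumptions: `track_point` is a hypothetical helper name, the feature map is a plain nested list, and the search window is a square with integer coordinates. It is meant to show the idea rather than reproduce the authors' code.

```python
from typing import List, Tuple

FeatureMap = List[List[List[float]]]  # indexed as [row][col][channel]


def l1_distance(a: List[float], b: List[float]) -> float:
    """Sum of absolute differences between two feature vectors."""
    return sum(abs(x - y) for x, y in zip(a, b))


def track_point(features: FeatureMap, ref_feature: List[float],
                prev: Tuple[int, int], radius: int) -> Tuple[int, int]:
    """Find the position near `prev` whose feature is closest to `ref_feature`."""
    height, width = len(features), len(features[0])
    r0, c0 = prev
    best, best_dist = prev, float("inf")
    # Scan a square window around the previous handle position.
    for r in range(max(0, r0 - radius), min(height, r0 + radius + 1)):
        for c in range(max(0, c0 - radius), min(width, c0 + radius + 1)):
            dist = l1_distance(features[r][c], ref_feature)
            if dist < best_dist:
                best, best_dist = (r, c), dist
    return best


# Toy 5x5 map with two channels; the tracked feature has moved one pixel right.
features = [[[0.0, 0.0] for _ in range(5)] for _ in range(5)]
features[2][3] = [1.0, 2.0]
new_pos = track_point(features, ref_feature=[1.0, 2.0], prev=(2, 2), radius=1)
print(new_pos)  # (2, 3)
```

Because the reference feature is taken from the original image and kept fixed, the search keeps following the same semantic point even as the image around it changes.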

By combining these two components, DragGAN can achieve interactive point-based manipulation on the generative image manifold. The generative image manifold is a high-dimensional space where each point represents an image generated by the GAN. By moving along this space, we can explore different variations and possibilities of image generation.

What can DragGAN do?

DragGAN can manipulate images in various ways that are difficult or impossible for existing methods. For example:

- It can hallucinate occluded content and deform shapes that consistently follow the object’s rigidity. For instance, it can make a cat’s tail longer or shorter without breaking its continuity or realism.

- It can handle multiple points simultaneously and preserve their relative positions and orientations. For instance, it can make a car’s wheels bigger or smaller while keeping them aligned with the body.

- It can handle diverse categories and scenarios without requiring any category-specific annotations or models. For instance, it can make a human’s face more expressive or change their hairstyle.

- It can also manipulate real images through GAN inversion. GAN inversion is a technique that finds the closest point on the generative image manifold for a given real image. By doing so, we can transfer the real image into the domain of the GAN and apply DragGAN to it.
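GAN inversion is usually posed as an optimization problem: search for the latent code whose generated image best matches the real one. The toy sketch below shows that idea with a linear "generator" so it can run without any deep learning library. Everything in it (the `generate` and `invert` names, the weight matrix, the squared-error loss, plain gradient descent) is an assumption made for illustration; real inversion pipelines use a trained nonlinear generator and typically more elaborate losses and optimizers.

```python
from typing import List

Matrix = List[List[float]]


def generate(weights: Matrix, latent: List[float]) -> List[float]:
    """Toy linear 'generator': each pixel is a weighted sum of the latent code."""
    return [sum(w * z for w, z in zip(row, latent)) for row in weights]


def invert(weights: Matrix, image: List[float],
           steps: int = 500, lr: float = 0.1) -> List[float]:
    """Recover a latent code by gradient descent on the reconstruction error."""
    latent = [0.0] * len(weights[0])
    for _ in range(steps):
        residual = [g - x for g, x in zip(generate(weights, latent), image)]
        # Gradient of 0.5 * ||generate(latent) - image||^2 with respect to latent.
        grad = [sum(weights[i][j] * residual[i] for i in range(len(weights)))
                for j in range(len(latent))]
        latent = [z - lr * g for z, g in zip(latent, grad)]
    return latent


# A 3-pixel "image" produced from a known 2-dimensional latent code.
weights = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
true_latent = [0.5, -0.25]
image = generate(weights, true_latent)
recovered = invert(weights, image)
print([round(z, 4) for z in recovered])  # [0.5, -0.25]
```

Once a latent code has been recovered for a real image, the image can be edited in the same way as any image the GAN generated itself.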

Here are some examples of DragGAN’s results:

Figure 1: Examples of DragGAN’s results.

The first row shows original images generated by StyleGAN2. The second row shows the same images after manipulation with DragGAN. The red dots indicate handle points and their target positions.

How to use DragGAN? – Yet to be publicly available

DragGAN is easy to use and does not require any programming skills or prior knowledge about GANs. All you need is a web browser and an internet connection.

To use DragGAN, you can visit their project page and follow these steps:

- Choose one of the pre-trained GAN models from different categories (e.g., animal faces, cars).

- Click on “Generate” to get an initial image from the GAN model.

- Click on any point on the image to create a handle point.

- Drag the handle point to any desired position on the image.

- Click on “Update” to see how DragGAN modifies the image according to your manipulation.

- Repeat the previous three steps (place a handle point, drag it, click “Update”) as many times as you want until you are satisfied with the result.

- You can also click on “Invert” to upload your own image and apply DragGAN to it.

Why does DragGAN matter? Potential Applications

DragGAN is not only fun to play with but also has potential applications across several fields.

For example:

- Creative content generation and editing for art, entertainment, education, etc.

- Data augmentation and synthesis for machine learning tasks such as classification, segmentation, detection, etc.

- Interactive exploration and analysis of generative models and their latent spaces.

- Studying human perception and cognition of visual content and its manipulation.

DragGAN is also an important step towards achieving more flexible and precise controllability of GANs and other generative models. It opens up new possibilities and challenges for future research and development in this area.